In recent years, modern techniques in deep learning and large-scale data sets have led to impressive progress in 3D instance segmentation, grasp pose estimation, and robotics. This allows for accurate detection directly in 3D scenes, object- and environment-aware grasp prediction, as well as stable and repeatable robotic manipulation.

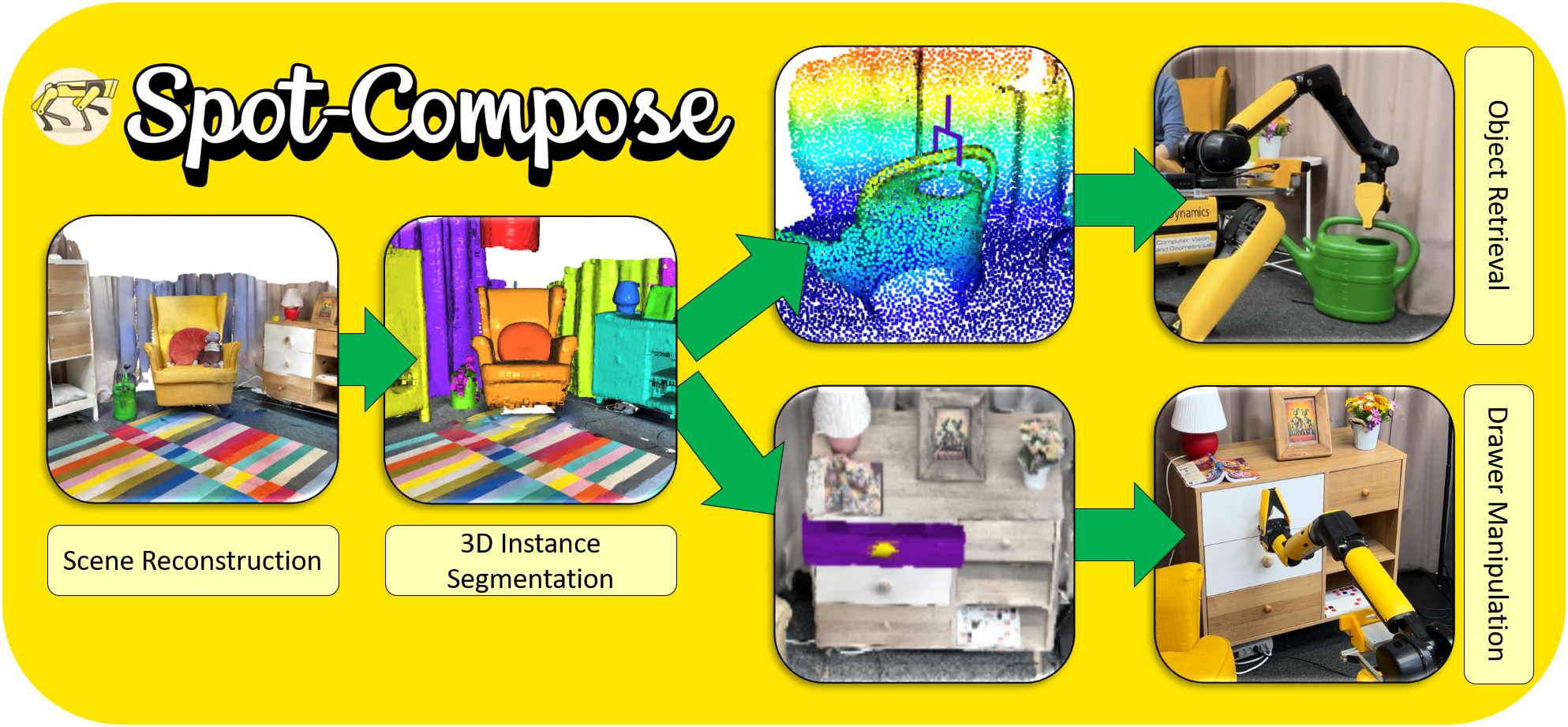

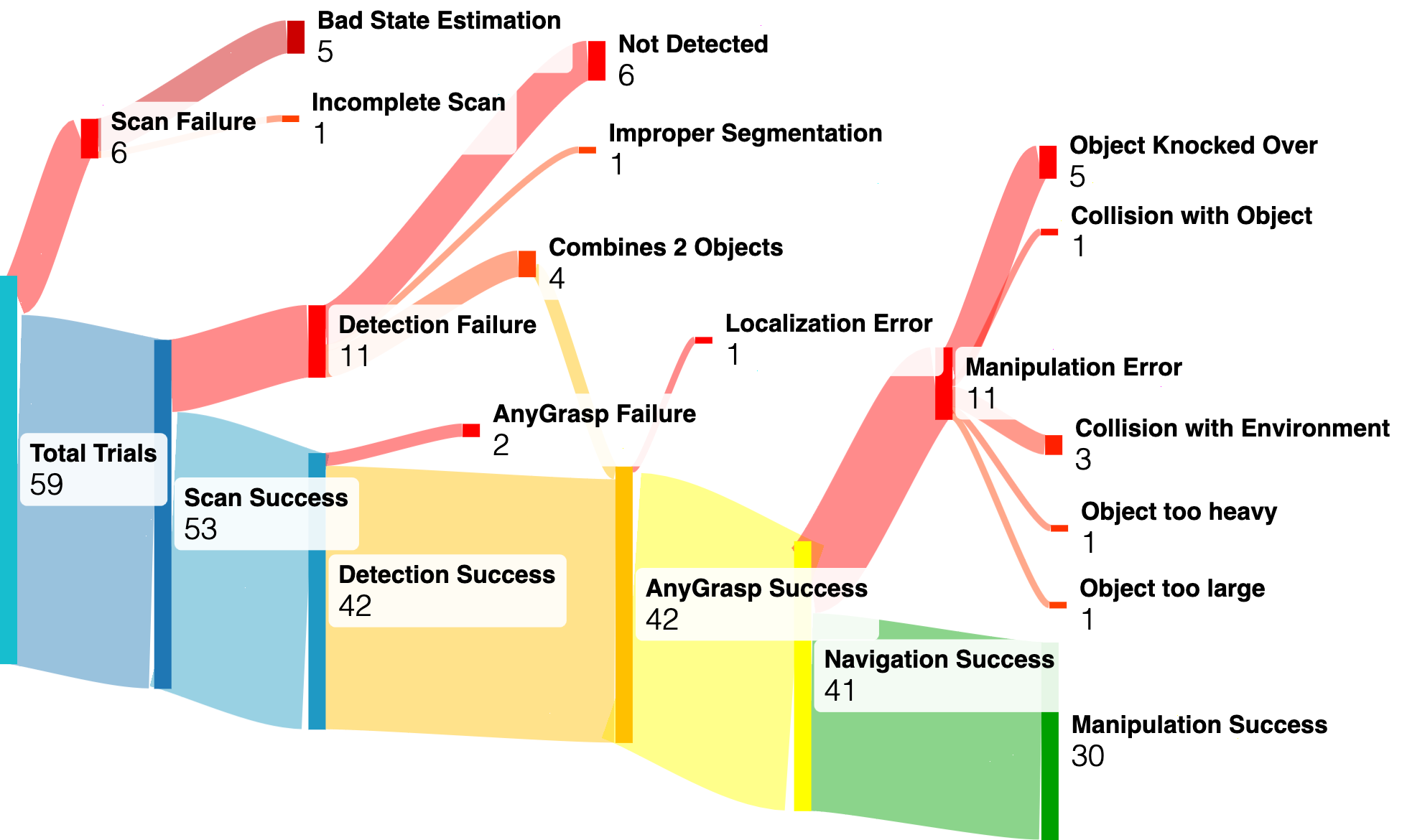

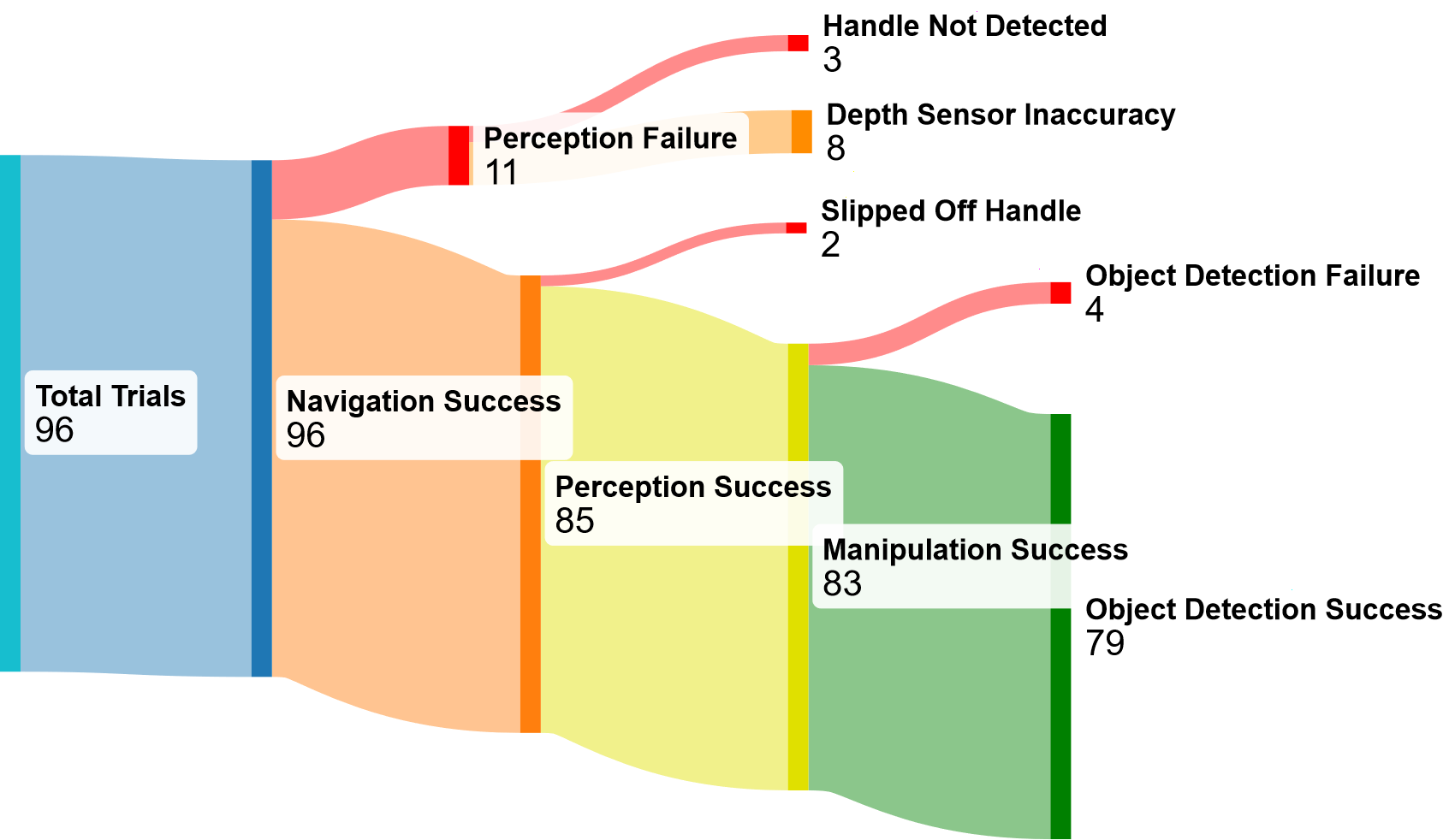

This work aims to integrate these cutting-edge methods into a comprehensive framework for robotic interaction in human-centric environments. Specifically, we leverage high-resolution point clouds from a commodity scanner for open-vocabulary instance segmentation, alongside grasp pose estimation, to demonstrate dynamic picking of objects and opening of drawers. We show the performance and robustness of our model in two sets of real-world experiments evaluating dynamic object retrieval and drawer opening, reporting a 51% and 82% success rate respectively.

Using Spot-Compose we can perform a variety of real-world tasks. The quantitative results of our experiments are shown here.

@inproceedings{lemke2024spotcompose,

title={Spot-Compose: A Framework for Open-Vocabulary Object Retrieval and Drawer Manipulation in Point Clouds},

author={Oliver Lemke and Zuria Bauer and Ren{\'e} Zurbr{\"u}gg and Marc Pollefeys and Francis Engelmann and Hermann Blum},

booktitle={2nd Workshop on Mobile Manipulation and Embodied Intelligence at ICRA 2024},

year={2024},

url={https://openreview.net/forum?id=DoKoCqOts2}

}